twVLM demo

We perform continual pretraining on ViT + twllm (70B) to create the first vision-language model (VLM) that speaks Traditional Chinese.
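The training objective is not described above. The sketch below shows one common choice for image-text continual pretraining, which is an assumption here and not necessarily what twVLM uses: next-token cross-entropy computed only over text tokens, with image-token positions excluded from the loss. The names `build_labels`, `masked_next_token_loss`, and `IGNORE` are hypothetical. The value `-100` mirrors the default `ignore_index` of PyTorch's `CrossEntropyLoss`. The code uses plain Python lists so that it stays self-contained.

```python
import math

IGNORE = -100  # hypothetical label marking positions excluded from the loss


def build_labels(num_image_tokens, text_ids):
    """Labels aligned to [image tokens | text tokens]; image positions are ignored."""
    return [IGNORE] * num_image_tokens + list(text_ids)


def masked_next_token_loss(logits, labels):
    """Mean cross-entropy of predicting labels[t + 1] from logits[t], skipping IGNORE targets."""
    total, count = 0.0, 0
    for t in range(len(labels) - 1):
        target = labels[t + 1]
        if target == IGNORE:
            continue  # no loss for predicting image positions
        row = logits[t]
        m = max(row)  # subtract the max for a numerically stable log-sum-exp
        log_z = m + math.log(sum(math.exp(x - m) for x in row))
        total += log_z - row[target]  # -log softmax(row)[target]
        count += 1
    return total / count if count else 0.0


# Toy example: 2 image tokens followed by text token ids [1, 3], vocabulary of 4.
labels = build_labels(num_image_tokens=2, text_ids=[1, 3])
logits = [[0.0] * 4 for _ in labels]  # uniform logits at every position
loss = masked_next_token_loss(logits, labels)
print(round(loss, 4))  # 1.3863 (= ln 4)
```

With uniform logits, each of the two scored text positions contributes ln 4. The image positions contribute nothing, so the model is trained only to produce text conditioned on the image.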

This is an ongoing project. Our model architecture combines a VisionTransformer (ViT), an MLP, and Taiwan Llama. Current findings show that the model retains instruction-following ability even without explicit prompt training. We attribute this to Taiwan Llama already being instruction-aligned from its own pre-training.
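How the three components connect is not spelled out above. Below is a minimal sketch assuming a LLaVA-style wiring, which is an assumption and not confirmed by this project. In this wiring, ViT patch features pass through a two-layer MLP (GELU in between) that maps them to the language model's embedding size. The projected "image tokens" are then prepended to the text token embeddings before they enter Taiwan Llama. `MLPProjector`, `build_input_sequence`, and the toy dimensions (`vision_dim=6`, `llm_dim=4`) are hypothetical. Real ViT and 70B-model widths are far larger.

```python
import math
import random


def gelu(x):
    # tanh approximation of GELU
    return 0.5 * x * (1.0 + math.tanh(math.sqrt(2.0 / math.pi) * (x + 0.044715 * x ** 3)))


def linear(x, weight, bias):
    # weight has out_dim rows, each of length in_dim
    return [sum(w * xi for w, xi in zip(row, x)) + b for row, b in zip(weight, bias)]


def init_linear(in_dim, out_dim, rng):
    weight = [[rng.gauss(0.0, 0.02) for _ in range(in_dim)] for _ in range(out_dim)]
    bias = [0.0] * out_dim
    return weight, bias


class MLPProjector:
    """Hypothetical two-layer MLP mapping one ViT patch feature to the LLM embedding size."""

    def __init__(self, vision_dim, llm_dim, seed=0):
        rng = random.Random(seed)
        self.fc1 = init_linear(vision_dim, llm_dim, rng)
        self.fc2 = init_linear(llm_dim, llm_dim, rng)

    def __call__(self, patch_feature):
        hidden = [gelu(h) for h in linear(patch_feature, *self.fc1)]
        return linear(hidden, *self.fc2)


def build_input_sequence(patch_features, text_embeddings, projector):
    """Prepend projected image tokens to the text token embeddings fed to the LLM."""
    image_tokens = [projector(p) for p in patch_features]
    return image_tokens + list(text_embeddings)


# Toy example: 3 ViT patch features (dim 6) and 2 text embeddings (dim 4).
projector = MLPProjector(vision_dim=6, llm_dim=4)
patches = [[0.1 * (i + j) for j in range(6)] for i in range(3)]
text = [[1.0, 0.0, 0.0, 0.0], [0.0, 1.0, 0.0, 0.0]]
sequence = build_input_sequence(patches, text, projector)
print(len(sequence), len(sequence[0]))  # 5 4
```

In this layout the language model sees the image only as extra embedding vectors in its input. That is consistent with (though not proof of) the observation that Taiwan Llama's existing instruction-following behavior carries over.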

The model also exhibits reasoning skills. We asked: "Where does bubble tea originate from?" The model responded: "Taiwan".

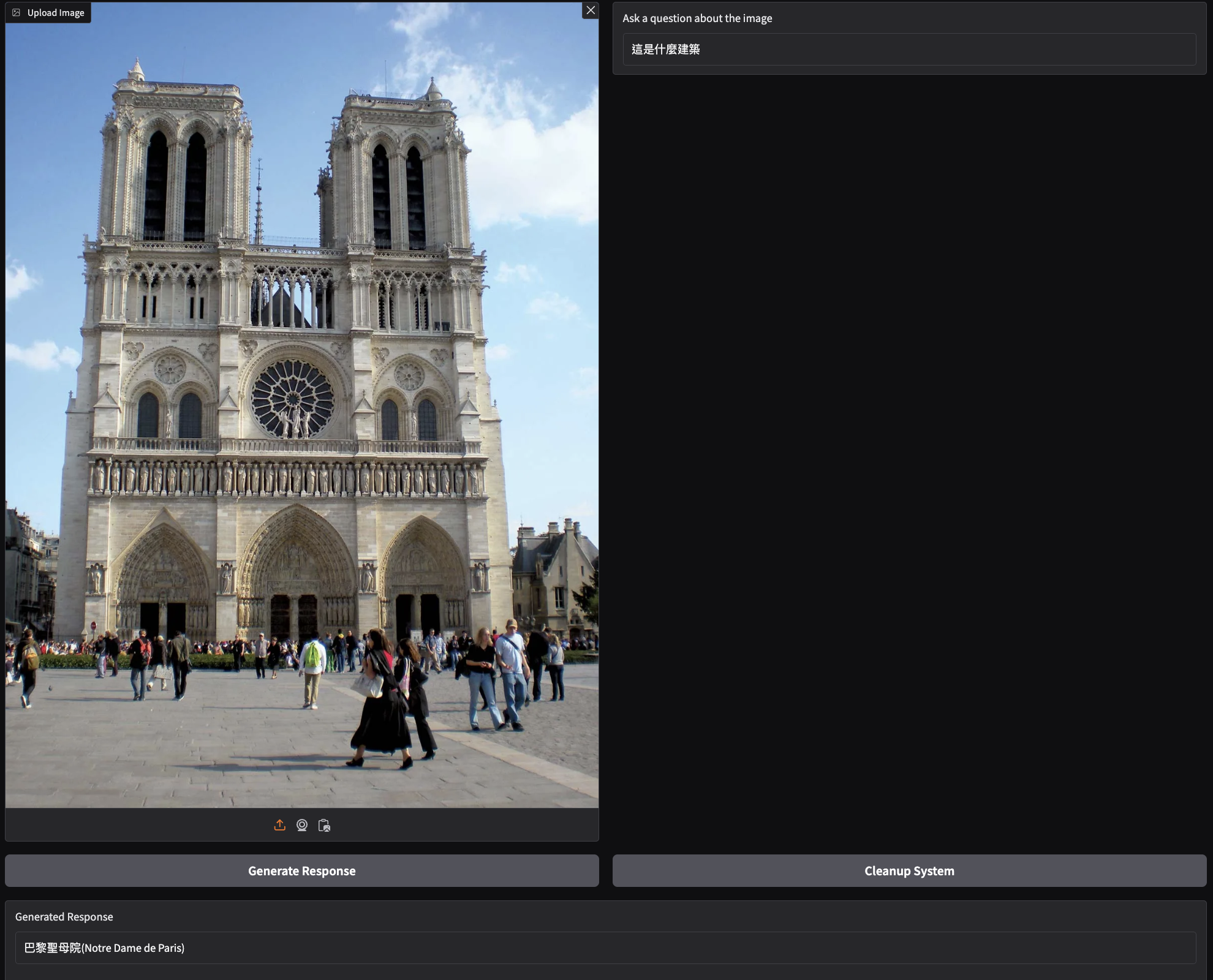

Left: Question: "Where is this place?" Answer: "University of Waterloo locates at Waterloo, Province of Ontario, Canada." Right: Question: "What is this?" Answer: "Notre Dame de Paris."

Question: "Where is this place?" Answer: "Carnegie Mellon University is a private research university located in Pittsburgh, Pennsylvania, USA."