BERT @ CGU

We explore the origins of BERT: why it was developed and how it revolutionized natural language processing. A hands-on workshop follows, in which students build their own sentiment analysis model using BERT and PyTorch fundamentals.

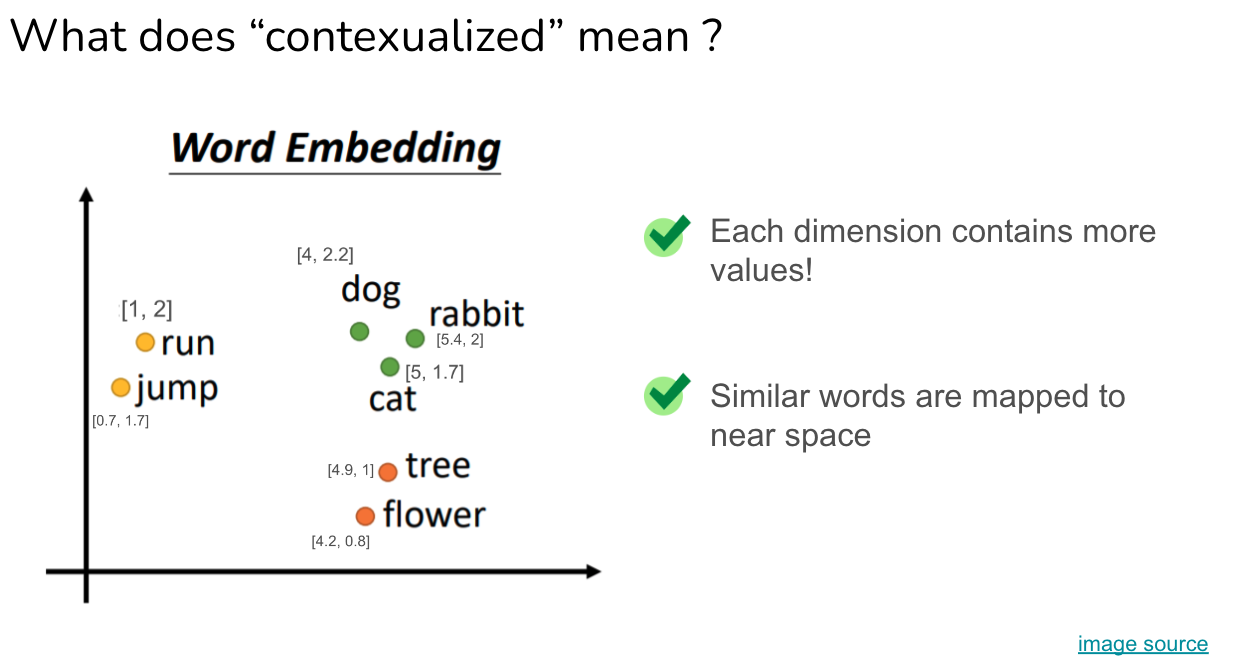

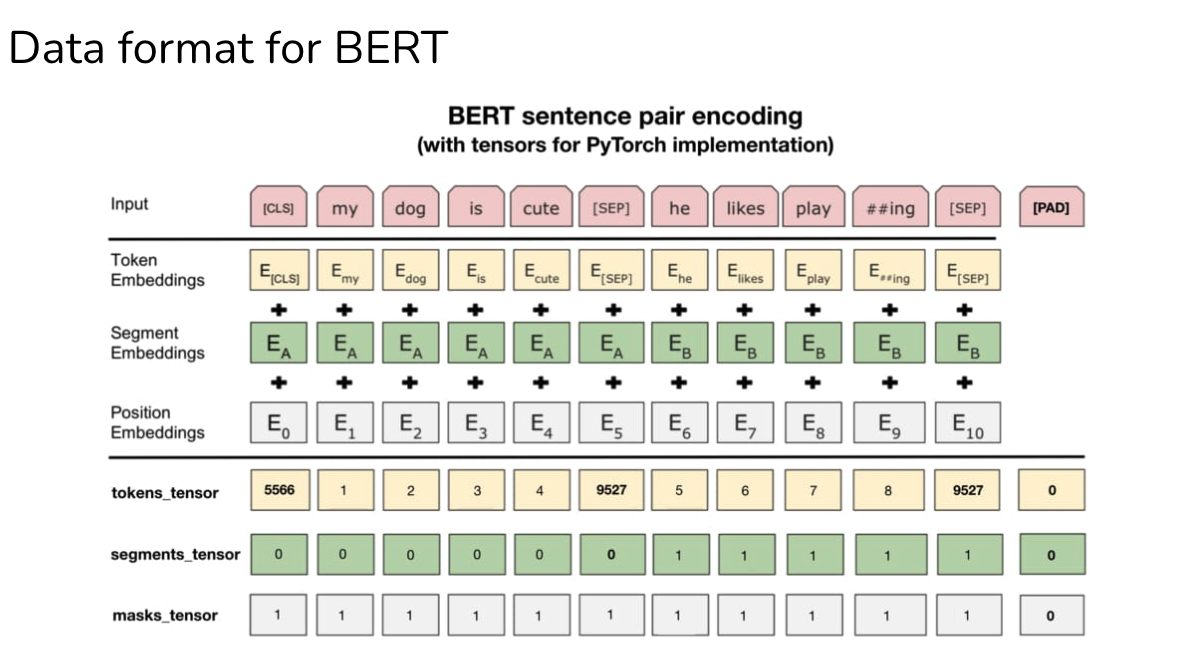

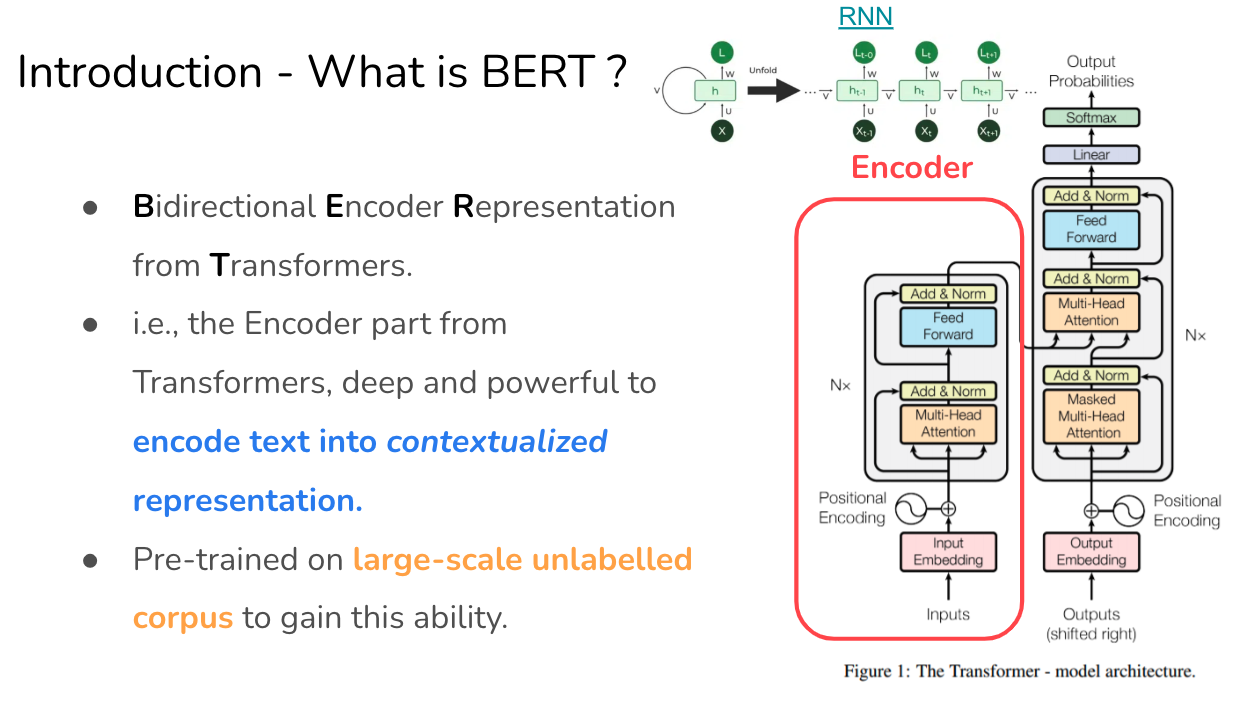

A comparison of traditional word embeddings and BERT’s contextualized embeddings. The left image illustrates basic word embeddings, where each word has a single fixed vector in a continuous space: similar words like ‘dog’, ‘cat’, and ‘rabbit’ cluster together, while semantically different words like ‘tree’ and ‘flower’ occupy different regions. The right image shows the input encoding used by BERT (Devlin et al., 2019). For each token, BERT sums a token embedding (E_token), a segment embedding (E_A/E_B), and a positional embedding (E_0 to E_10) to form its input representation. The Transformer layers then mix information across all positions, so each token’s final representation depends on its context, and the same word is encoded differently depending on how it is used.
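
The embedding sum described in the caption can be sketched in a few lines of PyTorch. This is a minimal illustration, not BERT's actual implementation: the class name `BertInputEmbeddings` and the small sizes in the usage example are assumptions made for readability, and the real model also applies dropout and then passes the result through a stack of Transformer layers.

```python
import torch
import torch.nn as nn


class BertInputEmbeddings(nn.Module):
    """Hypothetical sketch of BERT-style input embeddings (token + segment + position)."""

    def __init__(self, vocab_size=30522, hidden_size=768, max_positions=512, num_segments=2):
        super().__init__()
        self.token = nn.Embedding(vocab_size, hidden_size)        # E_token
        self.segment = nn.Embedding(num_segments, hidden_size)    # E_A / E_B
        self.position = nn.Embedding(max_positions, hidden_size)  # E_0, E_1, ...
        self.norm = nn.LayerNorm(hidden_size)

    def forward(self, token_ids, segment_ids):
        # Position indices 0..seq_len-1, shared across the batch via broadcasting.
        seq_len = token_ids.size(1)
        positions = torch.arange(seq_len, device=token_ids.device).unsqueeze(0)
        # The three embeddings are added element-wise, then normalized.
        summed = self.token(token_ids) + self.segment(segment_ids) + self.position(positions)
        return self.norm(summed)


# Usage with small, illustrative sizes (assumptions, not BERT's real configuration).
torch.manual_seed(0)
embeddings = BertInputEmbeddings(vocab_size=100, hidden_size=16, max_positions=32)

token_ids = torch.randint(0, 100, (1, 11))          # one sequence of 11 tokens
token_ids[0, 0] = 7
token_ids[0, 5] = 7                                 # the same token at two positions
segment_ids = torch.tensor([[0] * 6 + [1] * 5])     # sentence A, then sentence B

output = embeddings(token_ids, segment_ids)
print(output.shape)
```

Even before any attention layer runs, the two occurrences of token `7` receive different input vectors because their positional embeddings differ. Sensitivity to the surrounding words comes only later, from the Transformer layers that this sketch leaves out.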